This Week in AI: A preview of Disrupt 2024’s stacked AI panels

Hiya, folks, welcome to TechCrunch’s regular AI newsletter. If you want this in your inbox every Wednesday, sign up here.

This week, the TechCrunch crew (including yours truly) is at TC’s annual Disrupt conference in San Francisco. We’ve got a packed lineup of speakers from the AI industry, academia, and policy, so in lieu of my usual op-ed I thought I’d preview some of the great content headed your way.

My colleague Devin Coldewey will be interviewing Perplexity CEO Aravind Srinivas onstage. The AI-powered search engine is riding high, recently hitting 100 million queries served per week — but it’s also being sued by News Corp’s Dow Jones over what the publisher describes as a “content kleptocracy.”

Kirsten Korosec, TC’s transportation editor, will chat with Zoox co-founder and CTO Jesse Levinson in a fireside. Levinson, who has been in the thick of autonomous car technology for a decade, is now preparing the Amazon-owned robotaxi company for its next big adventure — and we’ll be reporting on it.

We’ll also have a panel on how AI is flooding the web with disinformation, featuring Meta Oversight Board member Pamela San Martin, Center for Countering Digital Hate CEO Imran Ahmed, and UC Berkeley CITRIS Policy Lab founder Brandie Nonnecke. The trio will discuss how generative AI tools, as they become more widely available, are being abused by an array of actors, including state actors, to create deepfakes and sow disinformation.

And we’ll hear from Cara CEO Jingna Zhang, AI Now Institute co-executive director Sarah Myers West, and ElevenLabs’ Aleksandra Pedraszewska on AI’s legal and ethical minefields. AI’s meteoric rise has created new ethical dilemmas and exacerbated old ones, while lawsuits drop left and right. This threatens both new and established AI companies, and the creators and workers whose labor feeds the models. The panel will tackle all of this — and more.

That’s just a sampling of what’s on deck this week. Expect appearances from AI experts like U.S. AI Safety Institute director Elizabeth Kelly, California senator Scott Wiener, Berkeley AI policy hub co-director Jessica Newman, Luma AI CEO Amit Jain, Suno CEO Mikey Shulman, and Splice CEO Kakul Srivastava.

News

Apple Intelligence launches: Through a free software update, iPhone, iPad, and Mac users can access the first set of Apple’s AI-powered Apple Intelligence capabilities.

Bret Taylor’s startup raises new money: Sierra, the AI startup co-founded by OpenAI chairman Bret Taylor, has raised $175 million in a funding round that values the startup at $4.5 billion.

Google expands AI Overviews: Google Search’s AI Overviews, which display a snapshot of information at the top of the results page, are beginning to roll out in more than 100 countries and territories.

Generative AI and e-waste: The immense and quickly advancing computing requirements of AI models could lead to the industry discarding the e-waste equivalent of more than 10 billion iPhones per year by 2030, researchers project.

Open source, now defined: The Open Source Initiative, a long-running institution aiming to define and “steward” all things open source, this week released version 1.0 of its definition of open source AI.

Meta releases its own podcast generator: Meta has released an “open” implementation of the viral generate-a-podcast feature in Google’s NotebookLM.

Hallucinated transcriptions: OpenAI’s Whisper transcription tool has hallucination issues, researchers say. Whisper has reportedly introduced everything from racial commentary to imagined treatments into transcripts.

Research paper of the week

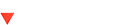

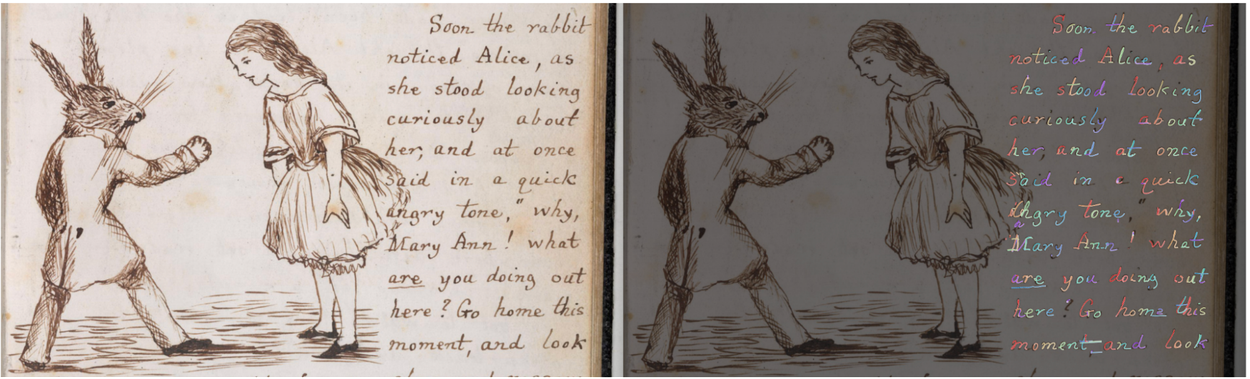

Google says it taught a model to convert photos of handwriting into “digital ink.”

The model, InkSight, was trained to recognize written words on a page and output strokes that roughly resemble handwriting. The goal, the Google researchers behind the project say, was to “capture the stroke-level trajectory details of handwriting” so that a user can store the resulting strokes in the note-taking app of their choice.

InkSight isn’t perfect. Google notes that it makes errors. But the company also claims that the model performs well across a range of scenarios, including challenging lighting conditions.

Let’s hope it’s not used to forge any signatures.

Model of the week

Cohere for AI, the nonprofit research lab run by AI startup Cohere, has released a new family of text-generating models called Aya Expanse. The models can write and understand text in 23 different languages, and Cohere claims they outperform other models, including Meta’s Llama 3.1 70B, on certain benchmarks.

Cohere says a technique it’s dubbed “data arbitrage” was key in training Aya Expanse. Taking inspiration from how humans learn by going to different teachers for unique skills, Cohere selected particularly capable multilingual “teacher” models to generate synthetic training data for Aya Expanse.

Synthetic data has its issues. Some studies suggest that overreliance on it can lead to models whose quality and diversity get progressively worse. But Cohere says that data arbitrage effectively mitigates this. We’ll see soon enough if the claim holds up to scrutiny.

Grab bag

OpenAI’s Advanced Voice Mode, the company’s realistic-sounding voice feature for ChatGPT, is now available for free in the ChatGPT mobile app for users in the EU, Switzerland, Iceland, Norway, and Liechtenstein. Previously, users in those regions had to subscribe to ChatGPT Plus to use Advanced Voice Mode.

A recent piece in The New York Times highlighted the upsides — and downsides — of Advanced Voice Mode, like its reliance on tropes and stereotypes when trying to communicate in the ways users ask. Advanced Voice Mode has blown up on TikTok for its uncanny ability to mimic voices and accents. But some experts warn it could lead to emotional dependency on a system that has no intelligence — or empathy, for that matter.